More on why the polls got it wrong

There is a very good guest slot on Political Betting on the state of the debate about why the opinion polls were so badly out in the general election.

The anonymous author points out that polling errors underestimating support for centre-right parties seem to be an international phenomenon:

"similar polling errors have occurred in other national elections this year.

In Israel, Likud were predicted to gain 22 seats (of 120) and ended up with 30, and last week in Denmark the blue block were expected to win by 1 or 2% and actually won by 5% – with the populist DPP notably outperforming their eve-of-election polling by 3% (21% to 18%).

On more limited polling, the same pattern can be seen in Finland – with the Centre Party overestimated by about 3% at the expense of the populist True Finns and centre-right National Coalition Party; in Estonia, where the winning centre-right Reform Party were underestimated; in the Croatian presidential election, where the polls didn’t give the narrow winner Kolinda Grabar-Kitarović much of a chance (though interestingly the exit polls nailed it); and in Poland’s presidential election, where Andrzej Duda’s first round victory came as a total shock."

The author of the PB article (who uses the nom de plume "Tissue Price") then refers to a number of articles with differing opinions on what went wrong (most of which have already been linked to on this blog).

Herding - did the pollsters lie?

For example there was the original Dan Hodges article which said that the pollsters lied.

There has been a reply to this by Matt Singh of "Number Cruncher Politics." Where Dan Hodges accused the pollsters of adjusting their results so they "herded" together, predicting Conservatives and Labour neck-and-neck so they could claim it was "too close to call" if they got it wrong, Matt argues, making a strong but not completely conclusive case, that

"the polling failure was an industry-wide problem and the evidence doesn't support the view that herding was a cause."

Dan in turn has come back and is sticking firmly to his guns, arguing

"Yes, the pollsters lied and here's the proof."

On balance I'd say that, although Dan hasn't proved deliberate deceit, the argument is slightly more on his side. One particularly powerful piece of evidence, which I'm surprised neither of them mentioned but which was rightly raised in the comments thread, is the Survation poll.

As Damian Lyons Lowe, founder and CEO of Survation, blogged, his company carried out an eve-of-poll survey on 6th May which found support as follows:

CON 37%

LAB 31%

LD 10%

UKIP 11%

GRE 5%

Others (including the SNP) 6%

i.e. very close indeed to what actually happened the following day.

In his words,

'the results seemed so “out of line” with all the polling conducted by ourselves and our peers – what poll commentators would term an “outlier” – that I “chickened out” of publishing the figures – something I’m sure I’ll always regret.'

I'm minded to believe Damian's explanation of why he didn't publish that poll, which would make this an example of herding due to (unjustified) lack of confidence in his findings rather than deliberate dishonesty.

Nevertheless, if the fact that the Survation eve-of-poll survey was pulled, and Damian's comments about why, do not constitute conclusive proof that Dan Hodges is right that there was at least some "herding", I don't know what would.

Going back to the "Tissue Price" article ...

He or she agrees with Peter Kellner's article, "We got it wrong. Why?", that the main problem was a classic case of so-called "shy Tory" syndrome, partly caused by people not wanting to stick their heads above the parapet and face hostility from left-wing friends.

Paraphrasing wildly, it was possibly also because some of the people who voted Conservative did not really want to admit this even to themselves: their vote was not based on liking the Conservatives. Rather, when they had the pencil in one hand and the ballot paper in the other, they were too scared of what Labour might do to the economy to risk voting in any way that might let Labour - or worse, Labour and the SNP - into power.

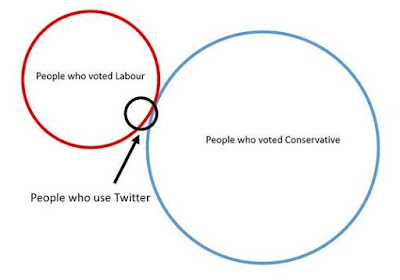

The article includes several graphics, the first a tongue-in-cheek and relatively self-explanatory Venn diagram, which I understand originated with Dan Hannan MEP, explaining why posts on Twitter were not representative of what was about to happen in the election.

Backing up the point, there were also graphs of data from the British Election Study, posted by Philip Cowley of Nottingham University, which further explained why political material on Twitter and Facebook was not representative of what was about to happen in the election. Supporters of the Nationalist parties - SNP and Plaid - and the Greens were proportionately most likely to post political comment on Twitter and Facebook, followed by Labour supporters. Conservative, UKIP and Lib Dem supporters were less likely than any of these to post their political views, with Conservatives the least likely of the three.

And that is in the context that the Conservative Campaign Centre was sending out vast quantities of what I think was pretty good campaign material to post on Twitter and Facebook, and most actual Conservative activists like myself were posting it.

That must mean that, by comparison with people on the left, Conservative voters other than activists were quiet. It does back up the "shy Tory" narrative.

Putting everything together, I come up with three conclusions:

1) Polls can be useful but do not put too much trust in them - they can also be wrong.

2) Try to get your information and views from a range of sources and not just the "echo chamber" you already agree with. This particularly applies to social media (but it can apply to face-to-face conversations with friends as well.)

3) Never take an election (or referendum) result for granted.
